As AI tools become part of everyday life, more people are beginning to question how safe they are. ChatGPT and similar online tools can be very helpful, but careless use can lead to unwelcome surprises, particularly at home. From privacy concerns to data exposure, the way AI works can create openings that users never realized existed. These tools are meant to be helpful, but they are not designed to replace personal judgment or security awareness. It is important to understand the limits of AI and use it sensibly. Ultimately, overreliance on AI can undermine personal and home security.

Sharing Information Can Threaten Privacy

Many users are careless when communicating with AI. They may share details about their daily routines, where they live, or their household habits. Over time, this information can add up to a complete profile. Being aware of what you share helps preserve your privacy.

AI Is Not a Secure Vault

ChatGPT is not designed to keep personal data confidential. It is risky to share passwords, security codes, or personal records. AI tools are not a substitute for secure passwords and encrypted storage. Use AI for guidance, not for safekeeping.
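For readers comfortable with a little scripting, one way to build this habit is to scan a draft message for obvious secrets before pasting it into a chatbot. The Python sketch below is only an illustration: the `redact` function and the patterns in `SENSITIVE_PATTERNS` are hypothetical examples, not part of ChatGPT or any other tool, and they will miss many kinds of sensitive detail, such as addresses or daily schedules.

```python
import re

# Hypothetical example patterns; real sensitive data takes many more forms.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    # Phrases such as "password: hunter2" or "code is 4821".
    "secret": re.compile(
        r"\b(?:password|passcode|pin|code)\b\s*(?:is|:|=)\s*\S+",
        re.IGNORECASE,
    ),
}


def redact(text: str) -> str:
    """Replace anything matching a known pattern with a placeholder."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[{label} removed]", text)
    return text


if __name__ == "__main__":
    draft = "My alarm code is 4821 and my email is sam@example.com."
    print(redact(draft))
```

A filter like this is a reminder, not a guarantee. It is still safest to reread a message yourself before sending it.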

Overdependence Can Weaken Judgment

Overusing AI can weaken personal decision-making. Users may stop doubting and questioning the information they receive. This tendency can carry over into security-related decisions. You still need to stay alert to protect your home.

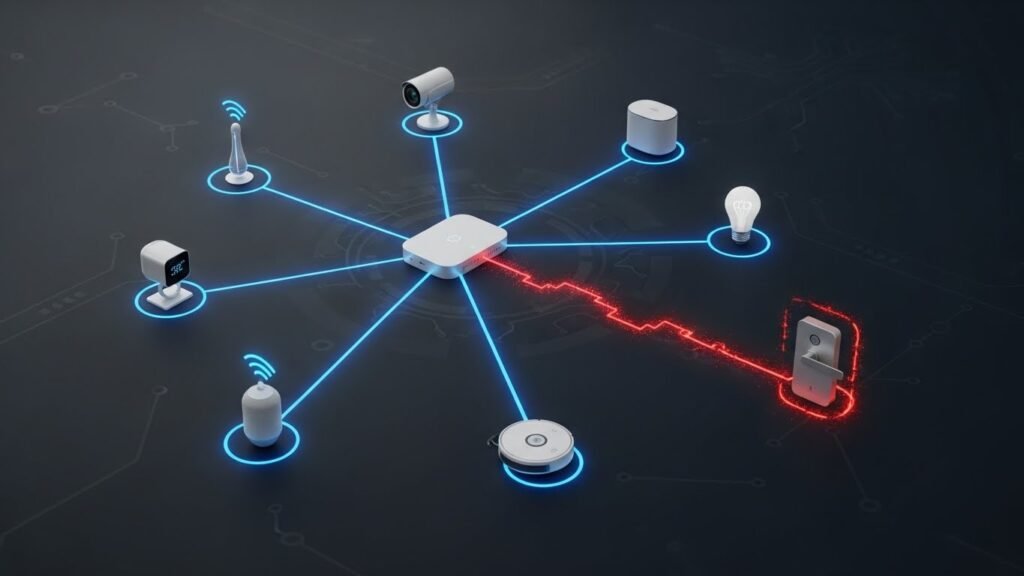

Hazards of Smart Home Integration

AI is increasingly likely to work alongside smart home devices. Vulnerabilities can arise from poor configuration or weak security. If connected systems are not properly secured, hackers can exploit them. Keep devices updated and use strong passwords.

Inaccurate Information Can Lead to Poor Decisions

AI is useful but not flawless. Acting on bad safety advice can cause real problems. This is particularly hazardous when dealing with security systems. Verify important facts with credible sources rather than taking them on trust.

Data Exposure Through Conversations

Even casual conversations can reveal behavioral patterns. These patterns become sensitive once they are pieced together. Understanding how such data could be used should make users more careful. Leaving out unnecessary details is the safer choice.

False Sense of Trust

Interactions with AI can feel safe and personal. This sense of security can encourage users to overshare. Remembering that AI is a tool, not a person, helps maintain healthy boundaries. Trust should be balanced with caution.

Children and AI Safety

Children may interact with AI without understanding the privacy risks. They can share personal or household information without realizing it. Parental guidance and supervision are essential. Digital safety should be taught from an early age.

Importance of Strong Digital Habits

Good digital hygiene reduces security risks. This includes regular updates, strong passwords, and privacy awareness. AI use should complement these habits, not replace them. Smart usage keeps you safe.

Using AI Responsibly at Home

ChatGPT can be a useful and powerful tool when it is used wisely. Do not share confidential information, and do not follow its advice blindly. Stay mindful of your presence online. With careful use, you can enjoy the benefits of AI without putting your home at risk.